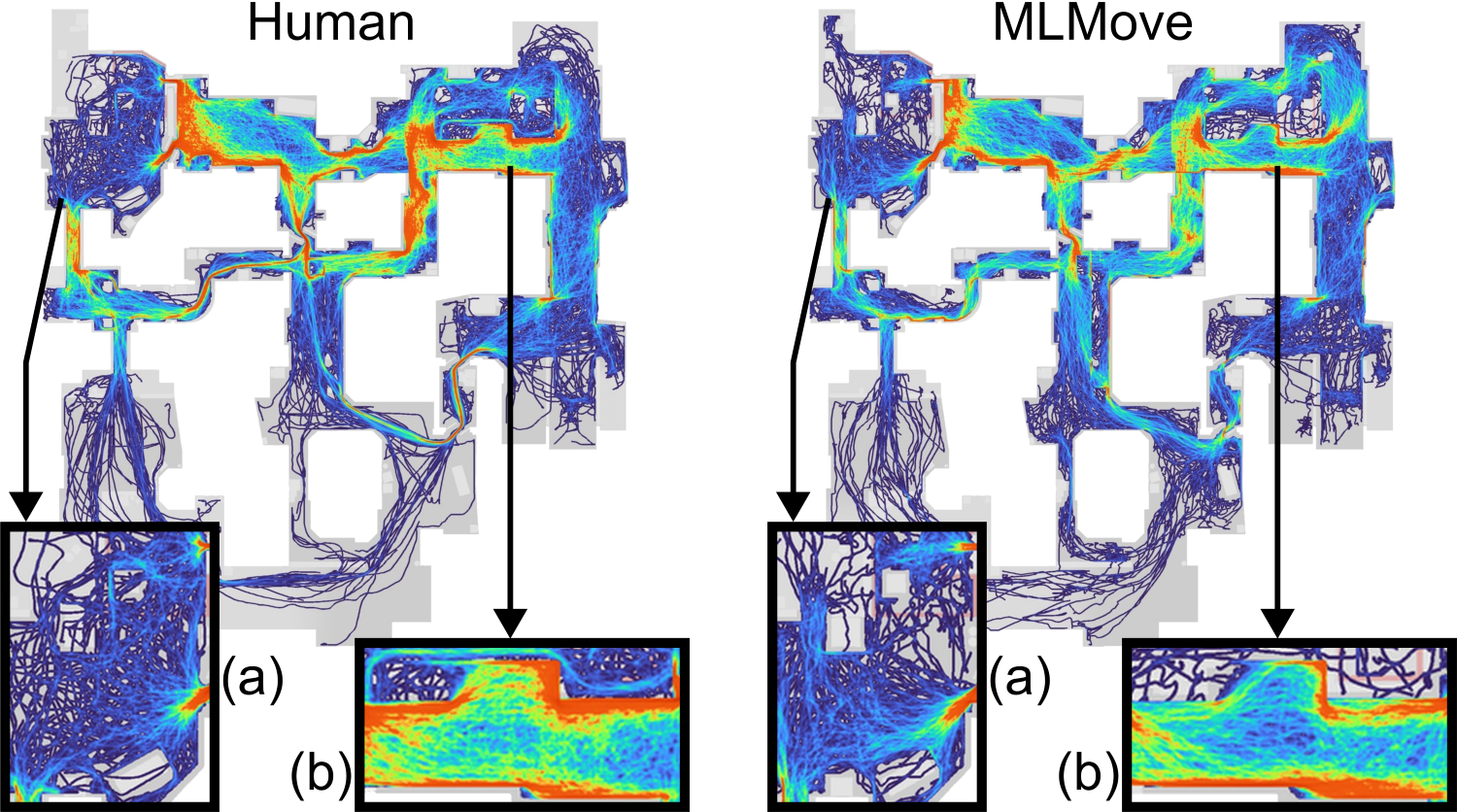

For the last 3 years of my Ph.D., I modeled complex human behaviors in a commercial video game, Counter-Strike. Using imitation learning (IL), I built bots that play in the style of human players. The series of projects listed below led me to believe that imitating human behavior is a stepping stone to understanding human behavior. That belief led me to build a data pipeline for processing human traces and an IL bot (MLMove) trained to imitate those traces. This research is described in Learning to Move Like Professional Counter-Strike Players, a paper published at SCA 2024.

- Computing Good Crosshair Placements Using RLpbr's GPU Ray Tracing (2021) - Compute analytics for where humans are likely to look based on map geometry

- Reaction Times and Crosshair Positions Aren't Enough to Catch Cheaters (2022) - Isolated player behavior analytics are insufficient to fully model complex human behavior

- A Hand-Crafted Structure for Imitation Learning (2022) - Hand-crafted bots are a good structure for comprehensive modeling of human behavior in Counter-Strike

- Revisiting Structure vs. Learning (2023) - Learned components within the bot structure must have access to the full game state, as narrowly defined bot behavior components fail to capture the full complexity of human behavior

I'm interested in many applications of modeling human behavior in games, including catching cheaters and other malicious actors. I worked on anti-cheat with Activision and presented the work at GDC 2023 in Hallucinations: Baiting Cheaters Into Self-Identifying by Reversing Detection. This paper proposes hallucinations with configurable behavior to catch cheaters. Three popular cheat programs fall for the hallucinations in Call of Duty.

I was advised by Professors Kayvon Fatahalian and Pat Hanrahan and supported by an NSF Graduate Research Fellowship and a Stanford Graduate Fellowship in Science and Engineering.

Prior Research: Programming Languages For Hardware Accelerators

Before transitioning to modeling human behavior through AI agents, my research focused on programming languages for hardware accelerators. Below are my dissertation, publications, and presentations from both MLMove and that earlier work.